oci-generative-ai-jet-ui

Accelerating AI Application Deployment Using Cloud Native Strategies

Introduction

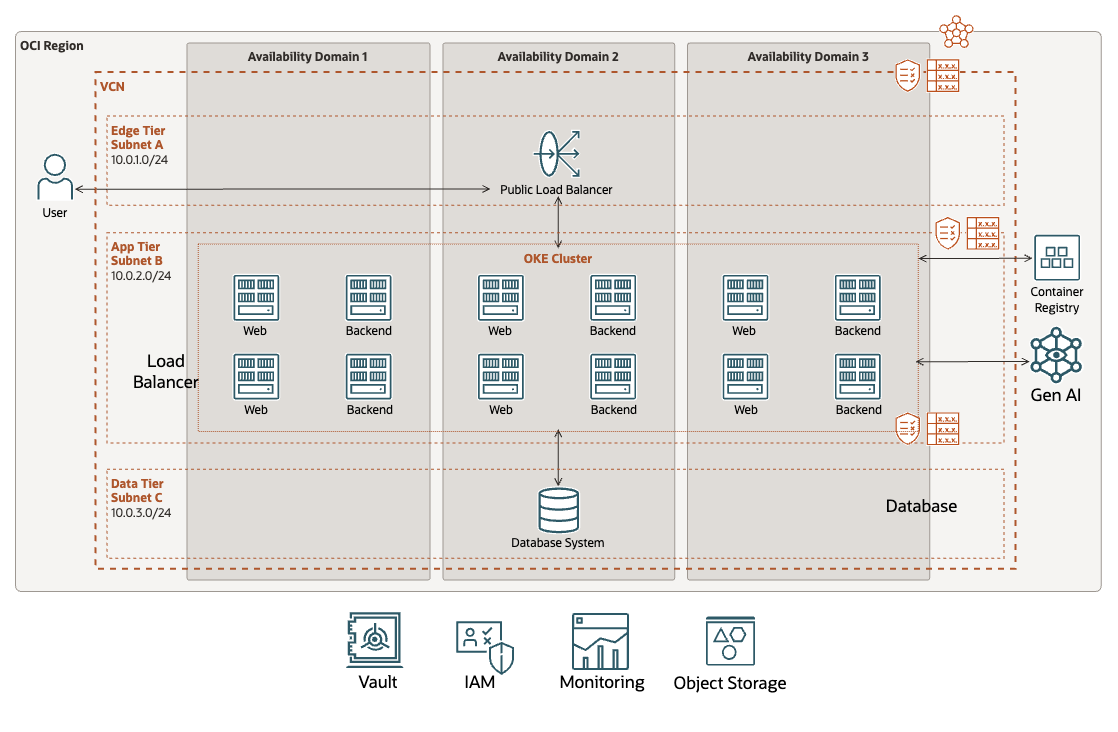

Kubernetes has become the standard for managing containerized applications, and Oracle Cloud Infrastructure Container Engine for Kubernetes (OKE) is a managed Kubernetes service for running them on OCI. Combining OKE with AI services lets you build scalable, highly available AI applications.

This project deploys an AI pipeline with a multipurpose front end for text generation and summarization. The pipeline integrates with a database to track interactions, enabling fine-tuning and performance monitoring for application optimization. It leverages OCI Generative AI APIs on a Kubernetes cluster.

Getting Started

0. Prerequisites and setup

- Oracle Cloud Infrastructure (OCI) Account - sign-up page

- Oracle Cloud Infrastructure (OCI) Generative AI Service - Getting Started with Generative AI

- Oracle Cloud Infrastructure Documentation - Generative AI

- Oracle Cloud Infrastructure (OCI) Generative AI Service SDK - Oracle Cloud Infrastructure Python SDK

- Node v16 - Node homepage

- Oracle JET v15 - Oracle JET Homepage

- OCI Container Engine for Kubernetes - documentation

- Oracle Autonomous Database - documentation

- Spring Boot framework - documentation

Get troubleshooting help in the FAQ.

Requirements

You need to be an administrator. If you are not, you need sufficient privileges for the OKE, Network, and Database services, plus others such as permission to inspect the tenancy. For example:

Allow group 'Default'/'GroupName' to inspect tenancies in tenancy

Set Up environment

On Cloud Shell, clone the repository:

git clone https://github.com/oracle-devrel/oci-generative-ai-jet-ui.git

Change to the new folder:

cd oci-generative-ai-jet-ui

Install and activate Node.js on Cloud Shell with nvm (the command below selects version 18):

nvm install 18 && nvm use 18
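Before running the project scripts, it can help to confirm which Node.js version is active in the current shell. The following is a minimal sketch, not part of the repository: the function names `node_major` and `check_node` are hypothetical, and the default minimum of 18 is an assumption taken from the nvm command above.

```shell
# Extract the major version from a string such as "v18.19.0".
node_major() {
  local version="${1#v}"          # drop a leading "v" if present
  printf '%s\n' "${version%%.*}"  # keep everything before the first dot
}

# Succeed only if the active Node.js major version is at least the minimum.
# The default of 18 is an assumption based on the nvm command above.
check_node() {
  local min="${1:-18}"
  local current
  current="$(node --version 2>/dev/null)" || {
    echo "node not found on PATH" >&2
    return 1
  }
  if [ "$(node_major "$current")" -ge "$min" ]; then
    echo "Node.js $current is active"
  else
    echo "Node.js $current is older than $min" >&2
    return 1
  fi
}
```

Calling `check_node` with no arguments checks against 18; pass another number to use a different minimum.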

Install dependencies for scripts.

cd scripts/ && npm install && cd ..

Set the environment variables

Generate the genai.json file with all the environment variables:

npx zx scripts/setenv.mjs

When prompted, enter the name of the compartment where you want to deploy the infrastructure. The root compartment is the default.

Deploy Infrastructure

Generate the terraform.tfvars file for Terraform:

npx zx scripts/tfvars.mjs

cd deploy/terraform

Init Terraform providers:

terraform init

Apply deployment:

terraform apply --auto-approve

cd ../..
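If you would rather review the changes before they are applied, Terraform can save a plan to a file and then apply exactly that plan. The sketch below is an optional alternative to `--auto-approve`, to be run from deploy/terraform after `terraform init`. The function name `plan_and_apply` and the plan file name `tfplan` are hypothetical choices for this example.

```shell
# Save a plan, ask for confirmation, then apply exactly the saved plan.
# "tfplan" is an arbitrary file name chosen for this sketch.
plan_and_apply() {
  local plan_file="${1:-tfplan}"
  local answer=""
  terraform plan -out="$plan_file" || return 1
  printf 'Apply %s? [y/N] ' "$plan_file"
  read -r answer
  case "$answer" in
    y|Y) terraform apply "$plan_file" ;;   # a saved plan is applied without a further prompt
    *)   echo "Skipped apply"; return 1 ;;
  esac
}
```

Applying a saved plan means Terraform executes what was shown in the plan output rather than computing a new plan at apply time.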

Release and create Kustomization files

Build and push images:

npx zx scripts/release.mjs

Create Kustomization files

npx zx scripts/kustom.mjs

Kubernetes Deployment

export KUBECONFIG="$(pwd)/deploy/terraform/generated/kubeconfig"

kubectl cluster-info

kubectl apply -k deploy/k8s/overlays/prod

Run get deploy a few times:

kubectl get deploy -n backend

Wait for all deployments to be Ready and Available.

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
backend                    1/1     1            1           3m28s
ingress-nginx-controller   1/1     1            1           3m17s
web                        1/1     1            1           3m21s
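Instead of re-running `kubectl get deploy` by hand, `kubectl wait` can block until the deployments report the Available condition. This is a minimal sketch: the function name `wait_for_deployments` is hypothetical, and the 300-second default timeout is an assumption, not a value taken from the project.

```shell
# Block until every Deployment in the namespace reports the Available
# condition, or fail once the timeout expires.
wait_for_deployments() {
  local namespace="${1:-backend}"
  local timeout="${2:-300s}"   # assumed default, adjust as needed
  kubectl wait deployment --all \
    --namespace "$namespace" \
    --for=condition=Available \
    --timeout="$timeout"
}
```

Running `wait_for_deployments` with no arguments targets the backend namespace; a non-zero exit status means at least one deployment did not become available in time.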

Access your application:

echo $(kubectl get service \
  -n backend \
  -o jsonpath='{.items[?(@.spec.type=="LoadBalancer")].status.loadBalancer.ingress[0].ip}')

This command prints the public IP address of the Load Balancer service in the backend namespace. If the response is an empty string, wait a moment and run the command again; the Load Balancer takes some time to be assigned a public IP address.
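The wait-and-retry step can also be scripted. The sketch below reuses the same jsonpath query as the command above and polls until an address appears. The function names `lb_ip` and `wait_for_lb_ip`, the default of 30 attempts, and the 10-second delay are all assumptions made for illustration.

```shell
# Print the external IP reported by LoadBalancer services in the namespace.
# Prints nothing if no address has been assigned yet.
lb_ip() {
  kubectl get service -n "$1" \
    -o jsonpath='{.items[?(@.spec.type=="LoadBalancer")].status.loadBalancer.ingress[0].ip}'
}

# Retry lb_ip until it returns a non-empty value or the attempts run out.
wait_for_lb_ip() {
  local namespace="${1:-backend}"
  local attempts="${2:-30}"   # assumed retry budget
  local delay="${3:-10}"      # assumed seconds between attempts
  local ip=""
  local i=1
  while [ "$i" -le "$attempts" ]; do
    ip="$(lb_ip "$namespace")"
    if [ -n "$ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "no Load Balancer IP after $attempts attempts" >&2
  return 1
}
```

For example, `wait_for_lb_ip backend` prints the address once it is available, or returns a non-zero status if the retry budget is exhausted.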

Paste the public IP address into your browser and test your new Generative AI website deployed in Kubernetes.

Remember to visit SQL Developer Web on the OCI Console for your Oracle Database and run some queries to review the history of prompts.

SELECT * FROM interactions;


Clean up

Delete Kubernetes components

kubectl delete -k deploy/k8s/overlays/prod

Destroy infrastructure with Terraform.

cd deploy/terraform

terraform destroy -auto-approve

cd ../..

Clean up the artifacts on Object Storage

npx zx scripts/clean.mjs

Local deployment

To run the application locally, follow the steps in the Local guide.